
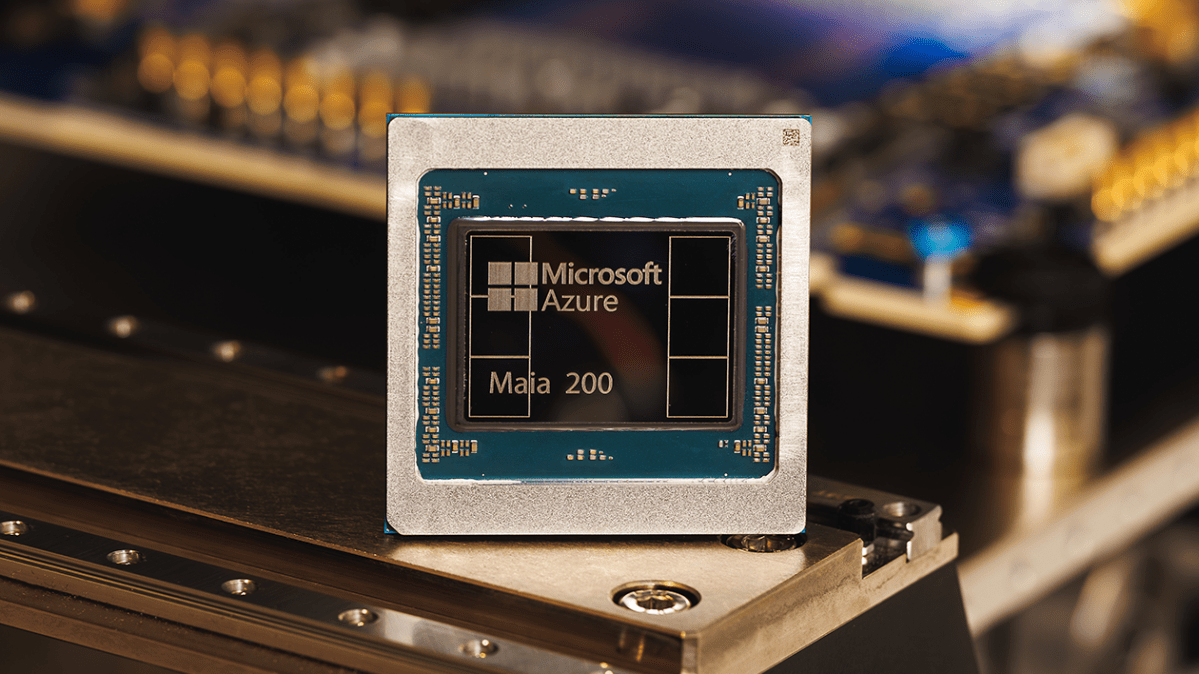

Microsoft Unleashes Maia 200: A Game-Changer for AI Inference

On January 26, 2026, Microsoft announced the Maia 200, its most advanced AI chip yet, positioned as a 'silicon workhorse' for scaling AI inference. The successor to the Maia 100 from 2023, it packs over 100 billion transistors and delivers over 10 petaflops at 4-bit precision and about 5 petaflops at 8-bit precision, a major leap in speed and efficiency.
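To put the headline figures in perspective, the Python sketch below turns peak FLOPs into a theoretical ceiling on generated tokens per second. It rests on assumptions that do not come from Microsoft: roughly 2 FLOPs per model parameter per generated token (a common rule of thumb for dense models), perfect utilization, and no memory-bandwidth bottleneck. The 70-billion-parameter model is hypothetical. Real decode throughput is usually bandwidth-bound and sits well below this ceiling.

```python
# Compute-bound ceiling on decode throughput (illustrative sketch).
# Assumptions, not Microsoft figures: ~2 FLOPs per parameter per generated
# token (dense model), 100% utilization, no memory-bandwidth limit.

PEAK_FLOPS = {
    "4-bit": 10e15,  # "over 10 petaflops" per the announcement (lower bound)
    "8-bit": 5e15,   # "about 5 petaflops" per the announcement
}


def max_tokens_per_second(num_params: float, peak_flops: float) -> float:
    """Upper bound on tokens/s for a dense model with num_params parameters."""
    if num_params <= 0 or peak_flops <= 0:
        raise ValueError("num_params and peak_flops must be positive")
    flops_per_token = 2 * num_params  # rule-of-thumb forward-pass cost
    return peak_flops / flops_per_token


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model
    for precision, flops in PEAK_FLOPS.items():
        ceiling = max_tokens_per_second(params, flops)
        print(f"{precision}: at most ~{ceiling:,.0f} tokens/s")
```

Halving the bits per operation doubles peak FLOPs, so the compute-bound ceiling doubles as well. Whether a given model can actually run at 4-bit depends on how well it tolerates quantization.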

Why Inference Matters in the AI Economy

Inference, the process of running trained AI models, now dominates operational costs for AI firms as models mature. Training is compute-intensive but episodic. Inference, by contrast, runs continuously on user queries, so its costs keep accumulating. Microsoft claims a single Maia 200 node can run today's largest models with headroom for larger future ones, reducing disruptions and power consumption.
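Whether one node can hold a large model depends heavily on whether the model's weights fit in memory, and numeric precision sets that size. The following is a minimal sketch. It assumes a hypothetical 1-trillion-parameter model and counts weights only. KV cache, activations, and runtime overhead add to the total and are ignored here.

```python
# Weight-only memory footprint at different precisions (illustrative).
# Ignores KV cache, activations and runtime overhead, which add substantially.

BITS_PER_WEIGHT = {"16-bit": 16, "8-bit": 8, "4-bit": 4}


def weight_footprint_gb(num_params: float, bits: int) -> float:
    """Storage for the weights in gigabytes (1 GB = 1e9 bytes)."""
    if num_params < 0 or bits <= 0:
        raise ValueError("num_params must be >= 0 and bits > 0")
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    params = 1e12  # hypothetical 1-trillion-parameter model
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: {weight_footprint_gb(params, bits):,.0f} GB of weights")
```

Going from 16-bit to 4-bit weights cuts the footprint fourfold, from 2,000 GB to 500 GB in this example. That is one reason low-precision formats matter for serving very large models on fewer chips.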

Market Impact: Breaking Nvidia's Grip

The launch intensifies competition among tech giants developing custom chips to escape Nvidia's dominance. Nvidia GPUs remain pivotal, but supply shortages and high costs are pushing buyers toward alternatives. Google's TPUs offer cloud-based compute, while Amazon's Trainium3, launched in December 2025, targets similar optimizations. Maia 200 fits Microsoft's Azure ecosystem, potentially lowering inference costs by 20-30% for clients running large language models.

- Performance Specs: 10+ petaflops (4-bit), 5 petaflops (8-bit), optimized for real-time AI tasks.

- Efficiency Gains: Reduced power draw enables denser data centers, cutting operational expenses.

- Deployment Scale: Designed for Azure, supporting hyperscale AI services like Copilot.

For enterprises, this means cheaper AI deployment. Based on Microsoft's benchmarks, a cloud provider using Maia 200 could serve twice as many inference requests per watt as it could with Nvidia H100s. Analysts predict this could shave billions off global AI infrastructure spending by 2027.
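"Requests per watt" is shorthand for throughput divided by power. In other words, requests per second per watt, which is the same as requests per joule. The sketch below shows how such a ratio is computed. Both devices and all of their numbers are hypothetical placeholders, not measured H100 or Maia 200 results.

```python
# Sketch: compare inference energy efficiency as requests per joule
# (requests/second divided by watts). All numbers are hypothetical.

from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    requests_per_second: float  # sustained throughput on a fixed workload
    power_watts: float          # average power draw during that workload

    def requests_per_joule(self) -> float:
        return self.requests_per_second / self.power_watts

    def joules_per_request(self) -> float:
        return self.power_watts / self.requests_per_second


def efficiency_ratio(a: Accelerator, b: Accelerator) -> float:
    """How many times more requests per joule `a` serves than `b`."""
    return a.requests_per_joule() / b.requests_per_joule()


if __name__ == "__main__":
    # Hypothetical devices, not real products.
    baseline = Accelerator("baseline-gpu", requests_per_second=100.0, power_watts=600.0)
    candidate = Accelerator("candidate-asic", requests_per_second=120.0, power_watts=360.0)
    print(f"{efficiency_ratio(candidate, baseline):.2f}x requests per joule")
```

Under a fixed data-center power budget, a 2x gain in requests per joule means roughly twice the requests served from the same power envelope. This is the mechanism behind the "denser data centers" point above. The comparison is only meaningful when both devices run the same model and workload.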

What It Means for Investors and Competition

Stock watchers note that Microsoft's hardware push bolsters Azure's edge against AWS and Google Cloud. Nvidia's multi-trillion-dollar market cap faces pressure as clients like Microsoft bring chip design in-house. Ricursive's $4B valuation for AI chip design tools signals that investors are betting on this shift.

Maia 200's rollout coincides with surging inference demand: OpenAI's ChatGPT alone handles 8.4 million science queries a week. Custom silicon like this could cut per-query costs from cents to fractions of a penny, unlocking mass-market AI apps in healthcare, finance, and autonomous systems.
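Per-query cost is roughly the hourly cost of the hardware divided by the number of queries it actually serves in that hour. The sketch below uses that amortization. The $10/hour rate, the throughputs, and the 60% utilization are hypothetical placeholders, not Azure prices or Maia 200 benchmarks. The model also ignores power, networking, and staffing.

```python
# Sketch: amortized hardware cost per query. Hourly cost, throughput and
# utilization are hypothetical placeholders, not real prices or benchmarks.

SECONDS_PER_HOUR = 3600


def cost_per_query(hourly_cost_usd: float, queries_per_second: float,
                   utilization: float = 1.0) -> float:
    """USD per query = hourly cost / queries actually served per hour."""
    if queries_per_second <= 0:
        raise ValueError("queries_per_second must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    served_per_hour = queries_per_second * SECONDS_PER_HOUR * utilization
    return hourly_cost_usd / served_per_hour


if __name__ == "__main__":
    for qps in (1.0, 20.0):  # hypothetical low- and high-throughput cases
        usd = cost_per_query(10.0, qps, utilization=0.6)
        print(f"{qps:>4} q/s -> {usd * 100:.4f} cents per query")
```

At a fixed hourly cost, per-query cost falls in inverse proportion to throughput. A chip that serves 20 times more queries for the same price brings the cost down 20 times. That relationship is how more efficient inference hardware can push costs from cents toward fractions of a penny.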

Future Implications: Toward Ubiquitous AI

By 2028, expect hybrid data centers that pair Nvidia GPUs for training with inference-specialized chips like Maia 200. This could democratize AI, enabling smaller firms to compete. OpenAI VP Kevin Weil's comparison with 2025's coding AI boom suggests science workflows will accelerate next.

Risks remain: custom chips lock users into ecosystems, and fab capacity at TSMC limits scale. Yet, Maia 200 positions Microsoft to capture 15-20% more AI cloud market share, reshaping a $200B+ inference economy.

One node running frontier models today hints at exaflop clusters tomorrow, powering agentic AI that reasons across domains. The chip war is escalating: Nvidia must innovate faster, or giants like Microsoft will redefine AI infrastructure.
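A rough count shows what "exaflop clusters" would take. One exaflop is 1,000 petaflops, so at the announced "over 10 petaflops" (4-bit) per chip, about 100 chips reach an exaflop of peak low-precision compute. The sketch below assumes an optional, hypothetical efficiency factor for real-world losses. Note that 4-bit exaflops are not comparable to the 64-bit exaflops used in supercomputer rankings.

```python
# Sketch: chips needed to reach a target aggregate throughput.
# Uses the announced 4-bit peak; the efficiency factor is a hypothetical
# stand-in for interconnect, scheduling and utilization losses.

import math

EXAFLOP = 1e18
MAIA_200_PEAK_4BIT = 10e15  # "over 10 petaflops" (treated as exactly 10 here)


def chips_for_target(target_flops: float, per_chip_flops: float,
                     efficiency: float = 1.0) -> int:
    """Smallest whole number of chips whose effective FLOPs meet the target."""
    if target_flops <= 0 or per_chip_flops <= 0:
        raise ValueError("target_flops and per_chip_flops must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return math.ceil(target_flops / (per_chip_flops * efficiency))


if __name__ == "__main__":
    for eff in (1.0, 0.5):
        n = chips_for_target(EXAFLOP, MAIA_200_PEAK_4BIT, eff)
        print(f"efficiency {eff:.0%}: {n} chips for one 4-bit exaflop")
```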